Wikipedia defines Master Data Management as "the processes, governance, policies, standards and tools that consistently define and manage the critical data of an organization to provide a single point of reference". Customer data is at the heart of every organization and is the most complicated data reference to master.

Traditional approaches leverage data warehousing techniques to cleanse, standardize and journal change history. Centralized data integration needs policies, standards and procedures to assure compliance, to preserve data quality and to mitigate decision risk. Focused on the enterprise asset, a single view of the customer reference is created. This works for the enterprise, but probably not for the individual lines of business. The attributes, processes and techniques that each region has cultivated over time either cannot fit into a master entity or are too expensive to tailor into one. And the unique execution styles that made those departments successful in the past are quickly overridden with directives to support centralized policies.

Federation is a process where a view of the data is provisioned without replicating the underlying sources, analogous to referring to a website by its URL instead of copying the content. A federated approach allows and encourages firms to leave data in the source system where it is best suited to operate. Should there be a specific need to augment, modify, enrich, or correct source data elements, business rules can be employed to provide that capability. No rip-and-replace is required. This means that regional efforts can move at their own pace and satisfy their specific needs without complex schedules or onerous overhead.
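To make the idea concrete, here is a minimal sketch of a federated view with a rule overlay. Everything in it is an illustrative assumption rather than a product API: the two in-memory dictionaries stand in for live regional systems, and `FederatedCustomerView` and `normalize_name` are hypothetical names.

```python
from typing import Callable, Dict, List

# Hypothetical regional source systems. In practice these would be live
# systems queried in place; plain dicts stand in for them here.
EMEA_CRM = {"c-1": {"name": "acme ltd ", "country": "GB"}}
APAC_BILLING = {"c-1": {"credit_limit": 5000}}

Rule = Callable[[dict], dict]


def normalize_name(record: dict) -> dict:
    """Business rule: tidy the customer name in the view only."""
    if "name" in record:
        # Build a new dict so the source record is never modified.
        record = {**record, "name": record["name"].strip().title()}
    return record


class FederatedCustomerView:
    """Resolves a customer at read time from sources that stay where they are."""

    def __init__(self, sources: Dict[str, dict], rules: List[Rule]):
        self.sources = sources
        self.rules = rules

    def get(self, customer_id: str) -> dict:
        merged: dict = {}
        # Read each source in place; nothing is copied into a central store.
        for source in self.sources.values():
            merged.update(source.get(customer_id, {}))
        # Apply business rules on top of the merged view.
        for rule in self.rules:
            merged = rule(merged)
        return merged


view = FederatedCustomerView(
    {"emea_crm": EMEA_CRM, "apac_billing": APAC_BILLING},
    [normalize_name],
)
customer = view.get("c-1")
```

The corrected name exists only in the view; the regional record keeps its original value, which is the point of avoiding rip-and-replace.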

Compliance requirements vary by region as well, and satisfying these requirements forms a mandatory project for many organizations. Leveraging this as a mechanism to assure data quality requires not only data federation but process federation as well: the ability to accommodate specific business processes but reuse and reapply selected components in an enterprise setting. The spend required to implement this must be very low and likely necessitates a vendor-driven product approach that provides the required data and process automation capabilities, replacing manual resource efforts.

Using federation techniques to empower departments to meet operational needs, with data quality assured through compliance projects, could be viewed as investments in data centralization. To justify this investment, gains in operational efficiencies, spend reduction or cost curtailment need to be identified. Analytics can offset the investment in data centralization through optimization techniques applied to spend, cost or time reduction. Ideally these analytics could be deployed at the departmental level to further expedite operations or tailored to the enterprise to identify additional gains or savings.

Quickly assembling an enterprise model from departmental assets requires flexibility and agility above and beyond traditional synthesis techniques. Not only must we understand and protect individual line-of-business initiatives, we must also assemble a federated view from them. Some elements will be common, or at least common enough to embrace easily; others will deserve the attention required to cast them to an enterprise asset; and some may benefit from rules-centric calculation (a computed value).
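The three kinds of element described above can be sketched as a single mapping function. The field names, the `COUNTRY_CODES` lookup and the tenure rule are assumptions chosen for illustration only.

```python
from datetime import date

# Hypothetical lookup used to cast a regional spelling to an enterprise code.
COUNTRY_CODES = {"United Kingdom": "GB", "Deutschland": "DE"}


def to_enterprise(record: dict, today: date) -> dict:
    """Map one departmental record onto an assumed enterprise model."""
    return {
        # Common element: adopted as-is.
        "customer_id": record["customer_id"],
        # Cast element: regional value converted to the enterprise standard,
        # falling back to the original value when no mapping is known.
        "country": COUNTRY_CODES.get(record["country"], record["country"]),
        # Computed element: derived by a rule rather than stored in any source.
        "tenure_years": (today - record["since"]).days // 365,
    }


departmental = {
    "customer_id": "c-1",
    "country": "United Kingdom",
    "since": date(2020, 1, 1),
}
enterprise = to_enterprise(departmental, today=date(2024, 1, 1))
```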

Iterative model development that quickly delivers content for analytic work stream evaluation would allow both processes to work collaboratively. Not only would the enterprise asset serve its primary function as a single reference set, but it could also be employed as a primary data acquisition asset. Data acquisition traditionally comprises two-thirds of an analytics project, where mostly manual processes are applied to structure the data, for example aligning change capture elements into appropriate time series.
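As an illustration of the alignment step mentioned above, the sketch below turns a sparse list of captured changes into a daily series by carrying the last known value forward. The change log layout and the `to_daily_series` helper are assumptions, not a description of any particular tool.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional, Tuple

# Hypothetical change capture log for one attribute of one customer:
# (date the change took effect, new value).
changes: List[Tuple[date, str]] = [
    (date(2024, 1, 4), "silver"),
    (date(2024, 1, 1), "bronze"),
]


def to_daily_series(
    changes: List[Tuple[date, str]], start: date, end: date
) -> Dict[date, Optional[str]]:
    """Forward-fill captured changes into one value per day, inclusive."""
    ordered = sorted(changes)  # change logs are not guaranteed to be ordered
    series: Dict[date, Optional[str]] = {}
    current: Optional[str] = None  # None until the first change is seen
    idx = 0
    day = start
    while day <= end:
        # Consume every change that has taken effect on or before this day.
        while idx < len(ordered) and ordered[idx][0] <= day:
            current = ordered[idx][1]
            idx += 1
        series[day] = current
        day += timedelta(days=1)
    return series


series = to_daily_series(changes, date(2024, 1, 1), date(2024, 1, 5))
```

Each day between the two changes carries the earlier value, so the result can be joined directly against other daily data.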

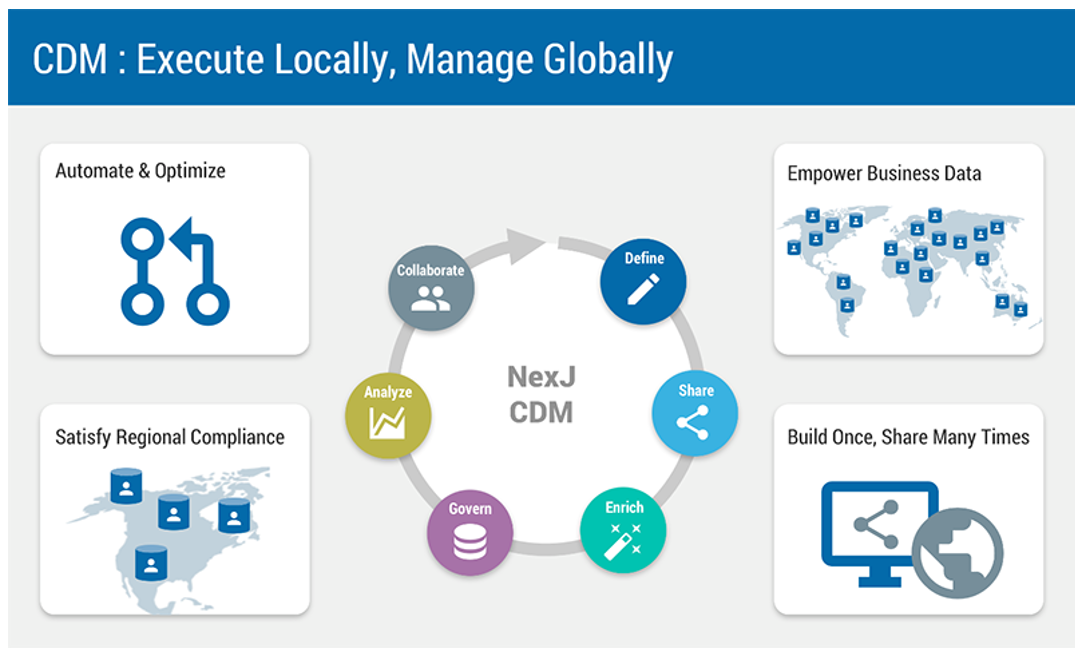

CDM (Customer Data Management) is designed to share content immediately across many different platforms. Business units can see their results in their portals, embedded into their existing applications, or in reports and analytics projects built directly off the data. CDM tracks source system data changes and provisions datasets ready for query. CDM is flexible; data elements can always be added at a later date. But the more history you have to draw on, the fewer course corrections your models will require.